Data, data, data... and visualization


Who said that Business Intelligence can be boring?


Open Source Business Intelligence tips in January

You can now check out the latest Open Source Business Intelligence tips for January, mainly on Pentaho, CTools and Saiku. Some of these tips are implemented in the Stratebi demos. Great stuff this month:


69 keys to understanding Big Data

A clear, useful and very illuminating presentation...


SAP buys Roambi

SAP continues its run of BI technology acquisitions, following Business Objects (which included Crystal Reports and Xcelsius), with the purchase of Roambi.

The BI world has changed in recent years: despite the large development investment SAP has made in SAP HANA, the rise of Big Data and high prices mean that HANA is not being adopted as widely as might have been expected.


OLAP alternatives on Hadoop?

More and more alternatives are emerging that bring together the world of OLAP analytics and Big Data. In this post, Neeraj Sabharwal reviews some of them:

- Kylin, OLAP for Big Data, step by step

- Pinot, the OLAP alternative released as open source by LinkedIn

- Breakthrough OLAP performance with FiloDB Cassandra and Spark

- Druid

- AtScale

- Kyvos Insights


A 'brief' History of Machine Learning

Machine Learning is very much in vogue nowadays, but many people confuse it with statistics, mathematics, Big Data, etc. What better way to understand it than a historical review of how it evolved?

History of Machine Learning:

1950 — Alan Turing creates the “Turing Test” to determine if a computer has real intelligence. To pass the test, a computer must be able to fool a human into believing it is also human.

1952 — Arthur Samuel wrote the first computer learning program. The program was the game of checkers, and the IBM computer improved at the game the more it played, studying which moves made up winning strategies and incorporating those moves into its program.

1957 — Frank Rosenblatt designed the first neural network for computers (the perceptron), which was intended to simulate the thought processes of the human brain.

1967 — The “nearest neighbor” algorithm was written, allowing computers to begin using very basic pattern recognition. This could be used to map a route for traveling salesmen, starting at a random city but ensuring they visit all cities during a short tour.

1985 — Terry Sejnowski invents NetTalk, which learns to pronounce words the same way a baby does.

1990s — Work on machine learning shifts from a knowledge-driven approach to a data-driven approach. Scientists begin creating programs for computers to analyze large amounts of data and draw conclusions — or “learn” — from the results.

1997 — IBM’s Deep Blue beats the world champion at chess.

2006 — Geoffrey Hinton coins the term “deep learning” to explain new algorithms that let computers “see” and distinguish objects and text in images and videos.

2010 — The Microsoft Kinect can track 20 human features at a rate of 30 times per second, allowing people to interact with the computer via movements and gestures.

2011 — IBM’s Watson beats its human competitors at Jeopardy.

2011 — Google Brain is developed, and its deep neural network can learn to discover and categorize objects much the way a cat does.

2012 – Google’s X Lab develops a machine learning algorithm that is able to autonomously browse YouTube videos to identify the videos that contain cats.

2014 – Facebook develops DeepFace, a software algorithm that is able to recognize or verify individuals in photos as accurately as humans can.

2015 – Amazon launches its own machine learning platform.

2015 – Microsoft creates the Distributed Machine Learning Toolkit, which enables the efficient distribution of machine learning problems across multiple computers.

2015 – Over 3,000 AI and Robotics researchers, endorsed by Stephen Hawking, Elon Musk and Steve Wozniak (among many others), sign an open letter warning of the danger of autonomous weapons which select and engage targets without human intervention.

2016 – Google’s artificial intelligence algorithm beats a professional player at the Chinese board game Go, which is considered the world’s most complex board game and is many times harder than chess. The AlphaGo algorithm developed by Google DeepMind managed to win five games out of five in the Go competition.

Here is also a simple, useful presentation on Data Mining and how it relates to Machine Learning.


If you like Big Data Analytics, you should see these two presentations

We have spoken ;-)


Big Data Landscape 2016

If the Big Data world already seems complex, this image of the ecosystem of its main solutions and technologies (and mind you, there are many more) may leave you feeling overwhelmed.

Fortunately, there is plenty of more introductory material that can help you 'ground' all this information and make sense of it from the point of view of how to start using it.

Take a look at these presentations, which may help:

- Big Data for Dummies

- 69 keys to Big Data

- A 'brief' history of Machine Learning


14 uses of Business Intelligence Analytics applications

We often talk about tools, technologies, architectures, databases, etc., but we rarely stop to look at the uses and applications that all these tools and technologies make possible, now that analytics has complemented Business Intelligence through the massive use of data with statistical and Machine Learning techniques.

Here are some examples:

- Business experiments: Business experiments, experimental design and AB testing are all techniques for testing the validity of something – be that a strategic hypothesis, new product packaging or a marketing approach. It is basically about trying something in one part of the organization and then comparing it with another where the changes were not made (used as a control group). It’s useful if you have two or more options to decide between.

- Visual analytics: Data can be analyzed in different ways and the simplest way is to create a visual or graph and look at it to spot patterns. This is an integrated approach that combines data analysis with data visualization and human interaction. It is especially useful when you are trying to make sense of a huge volume of data.

- Correlation analysis: This is a statistical technique that allows you to determine whether there is a relationship between two separate variables and how strong that relationship may be. It is most useful when you ‘know’ or suspect that there is a relationship between two variables and you would like to test your assumption.

- Regression analysis: Regression analysis is a statistical tool for investigating the relationship between variables; for example, is there a causal relationship between price and product demand? Use it if you believe that one variable is affecting another and you want to establish whether your hypothesis is true.

- Scenario analysis: Scenario analysis, also known as horizon analysis or total return analysis, is an analytic process that allows you to analyze a variety of possible future events or scenarios by considering alternative possible outcomes. Use it when you are unsure which decision to take or which course of action to pursue.

- Forecasting/time series analysis: Time series data is data that is collected at uniformly spaced intervals. Time series analysis explores this data to extract meaningful statistics or data characteristics. Use it when you want to assess changes over time or predict future events based on what has happened in the past.

- Data mining: This is an analytic process designed to explore data, usually very large business-related data sets – also known as ‘big data’ – looking for commercially relevant insights, patterns or relationships between variables that can improve performance. It is therefore useful when you have large data sets that you need to extract insights from.

- Text analytics: Also known as text mining, text analytics is a process of extracting value from large quantities of unstructured text data. You can use it in a number of ways, including information retrieval, pattern recognition, tagging and annotation, information extraction, sentiment assessment and predictive analytics.

- Sentiment analysis: Sentiment analysis, also known as opinion mining, seeks to extract subjective opinion or sentiment from text, video or audio data. The basic aim is to determine the attitude of an individual or group regarding a particular topic or overall context. Use it when you want to understand stakeholder opinion.

- Image analytics: Image analytics is the process of extracting information, meaning and insights from images such as photographs, medical images or graphics. As a process it relies heavily on pattern recognition, digital geometry and signal processing. Image analytics can be used in a number of ways, such as facial recognition for security purposes.

- Video analytics: Video analytics is the process of extracting information, meaning and insights from video footage. It includes everything that image analytics can do plus it can also measure and track behavior. You could use it if you wanted to know more about who is visiting your store or premises and what they are doing when they get there.

- Voice analytics: Voice analytics, also known as speech analytics, is the process of extracting information from audio recordings of conversations. This form of analytics can analyze the topics or actual words and phrases being used, as well as the emotional content of the conversation. You could use voice analytics in a call center to help identify recurring customer complaints or technical issues.

- Monte Carlo Simulation: The Monte Carlo Simulation is a mathematical problem-solving and risk-assessment technique that approximates the probability of certain outcomes, and the risk of certain outcomes, using computerized simulations of random variables. It is useful if you want to better understand the implications and ramifications of a particular course of action or decision.

- Linear programming: Also known as linear optimization, this is a method of identifying the best outcome based on a set of constraints using a linear mathematical model. It allows you to solve problems involving minimizing and maximizing conditions, such as how to maximize profit while minimizing costs. It’s useful if you have a number of constraints, such as time or raw materials, and you want to know the best combination or where to direct your resources for maximum profit.

Seen in Forbes
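The business experiments (A/B testing) described in the list above can be illustrated with a minimal Python sketch. It assumes the outcome being compared is a simple conversion rate and uses a standard two-proportion z-test; the function name and the example figures are hypothetical, not taken from the Forbes article.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare the conversion rate of a control group (A) with a variant (B).

    Returns (z, p_value). The p-value is two-sided, and the standard error is
    pooled under the null hypothesis that both rates are equal.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution:
    # 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical experiment: new packaging shown to 2,000 visitors (B)
# versus the current packaging (the control group) shown to 2,000 visitors (A).
z, p = two_proportion_z_test(conv_a=200, n_a=2000, conv_b=250, n_b=2000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (conventionally below 0.05) suggests the difference between the two groups is unlikely to be due to chance alone. The control group is what makes that comparison meaningful.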
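Correlation and regression analysis from the list above can be sketched as follows, assuming a single explanatory variable and an ordinary least-squares straight-line fit. The price and demand figures are hypothetical. Only the Python standard library is used.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient: strength and direction (-1..1) of a linear relationship."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(ss_x * ss_y)


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: unit price versus units sold per week.
prices = [10, 12, 14, 16, 18]
demand = [100, 92, 85, 76, 70]

r = pearson_r(prices, demand)
slope, intercept = fit_line(prices, demand)
print(f"r = {r:.3f}; demand ~ {slope:.2f} * price + {intercept:.2f}")
```

A strong correlation, or a well-fitting line on observational data, does not by itself prove that price causes the change in demand. Confirming a causal hypothesis usually needs a controlled comparison, such as the business experiments described above.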
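Forecasting/time series analysis covers many methods. One of the simplest is simple exponential smoothing, shown here as an illustrative choice rather than the technique Forbes had in mind. The sketch assumes evenly spaced observations with no strong trend or seasonality; for data with trend or seasonality, methods such as Holt-Winters are more appropriate. The sales figures are hypothetical.

```python
def exponential_smoothing(series, alpha):
    """Simple exponential smoothing.

    Returns the smoothed level after each observation. The last level is the
    one-step-ahead forecast. alpha (0 < alpha <= 1) controls how much weight
    recent observations get relative to older ones.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]  # initialise the level with the first observation
    levels = [level]
    for value in series[1:]:
        level = alpha * value + (1 - alpha) * level
        levels.append(level)
    return levels


# Hypothetical monthly sales, collected at uniformly spaced intervals.
monthly_sales = [120, 132, 128, 141, 150, 147]
levels = exponential_smoothing(monthly_sales, alpha=0.5)
print(f"Forecast for next month: {levels[-1]:.1f}")
```

A higher `alpha` reacts faster to recent changes but is noisier. A lower one smooths more but lags behind real shifts in the series.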
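The Monte Carlo Simulation entry above can be made concrete with a small risk-assessment sketch. It assumes a project whose phase costs are only known as three-point estimates (low, most likely, high), each modelled with a triangular distribution. The phases, costs and budget are hypothetical, and a fixed seed keeps the run reproducible.

```python
import random


def prob_over_budget(tasks, budget, trials=100_000, seed=42):
    """Estimate P(total cost > budget) by Monte Carlo simulation.

    Each task is (low, most_likely, high); its cost in each trial is drawn
    from a triangular distribution with those parameters.
    """
    rng = random.Random(seed)  # seeded so results are reproducible
    over = 0
    for _ in range(trials):
        # random.triangular takes (low, high, mode), in that order.
        total = sum(rng.triangular(low, high, mode) for low, mode, high in tasks)
        if total > budget:
            over += 1
    return over / trials


# Hypothetical project: three-point cost estimates (in thousands) per phase.
phases = [(2, 3, 5), (1, 2, 4), (3, 4, 8)]
risk = prob_over_budget(phases, budget=10)
print(f"Estimated probability of exceeding the budget: {risk:.1%}")
```

Summing the three most-likely values (9) would suggest a budget of 10 is safe. The simulation shows how the right-skewed estimates push the total above it in a large share of scenarios.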


Two months of interesting posts and news


Open Source Business Intelligence Tips in February

You can now check out the latest Open Source Business Intelligence tips for February, mainly on Pentaho, CTools and Saiku. Some of these tips are implemented in the Stratebi demos. Great stuff this month:


- A 'brief' History of Machine Learning

- 14 uses of Business Intelligence Analytics applications

- OLAP alternatives on Hadoop?

- 69 keys to understanding Big Data

- Big Data Landscape 2016

- Customer Use Case: Emerging Big Data Technology and Data Sources

An introduction to the Alfresco document management system


Tools for measuring online reputation in tourism

Highly recommended:


Sentiment Analysis in Jedox

Download the free Social Analytics app, now including sentiment analysis and trendlines: http://jedox-social-analytics.com


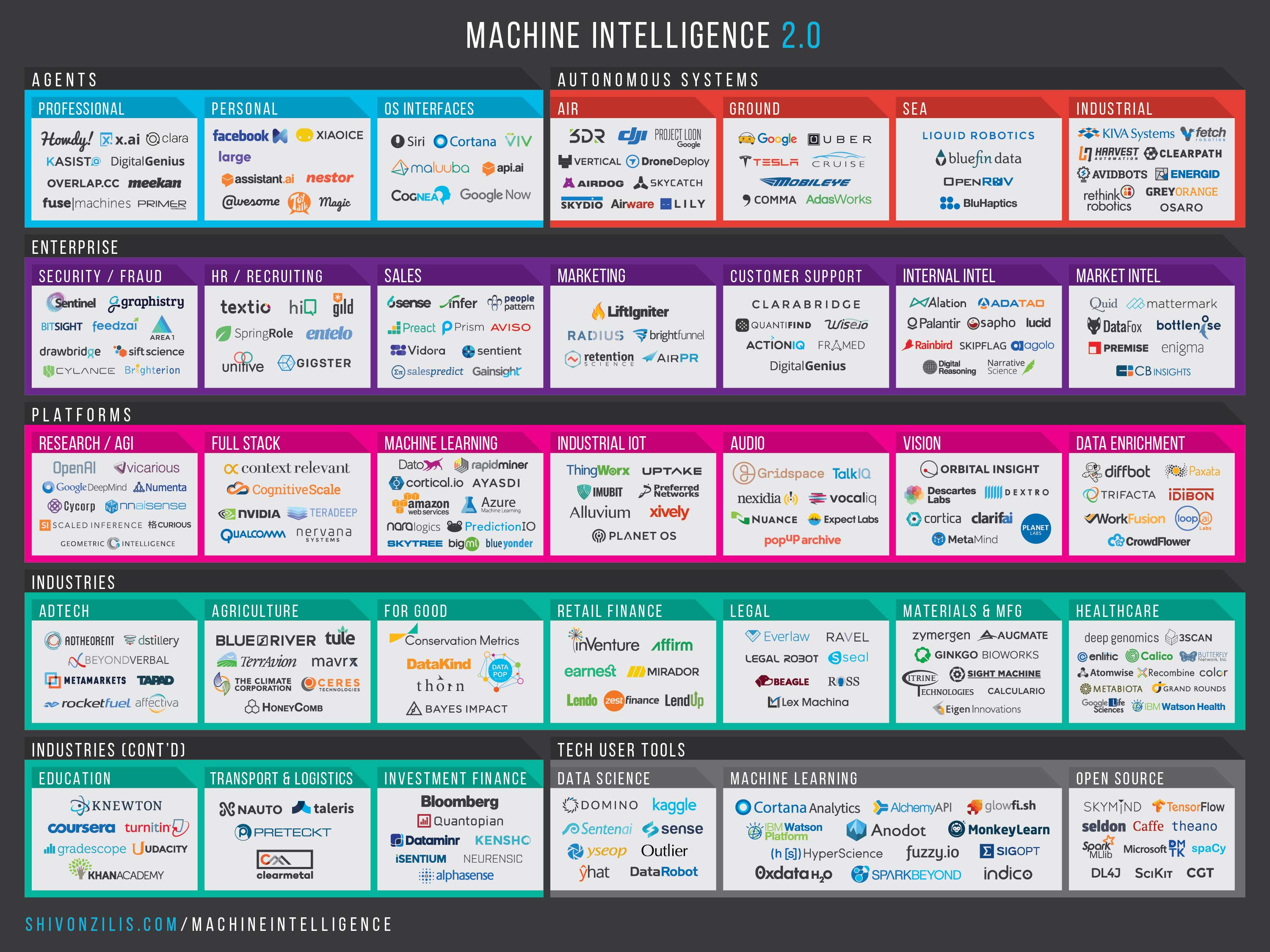

The current state of machine intelligence 2.0


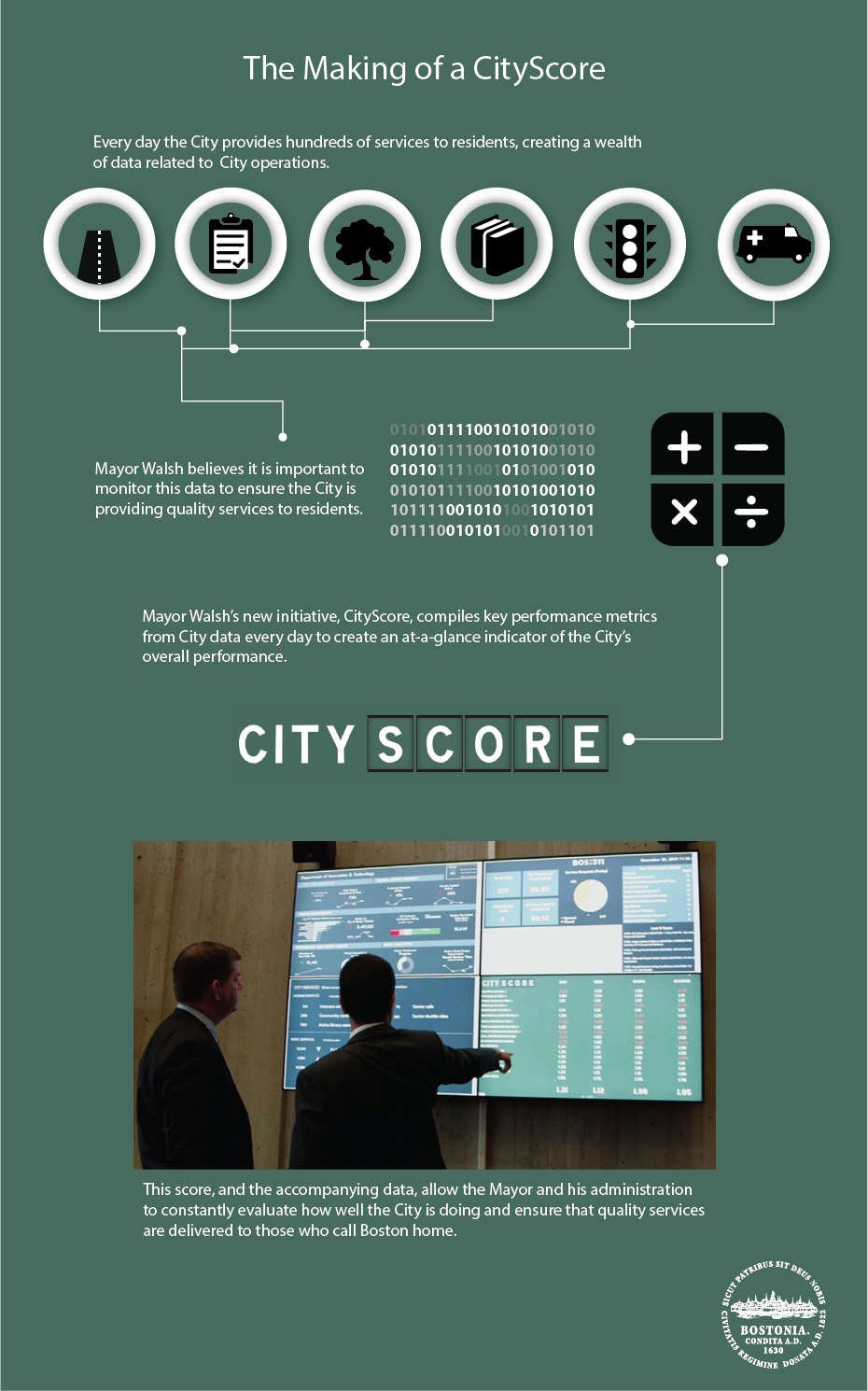

Boston's CityScore

CityScore is a new initiative designed to inform the Mayor and city managers about the overall health of the City at a moment's notice.

By aggregating and displaying key metrics from across the City, CityScore helps the Mayor and other executives spot trends that need additional investigation, and measure the impact of changes to process and policy. Cities and city government are complex, and CityScore gives leaders easy, real-time access to a vast amount of information about the city services critical to quality of life in Boston.


Talend Data Preparation: free ETL for end users

It is really a data preparation and organization tool rather than a complete ETL tool. Even so, for a very large share of end users it covers most of their data manipulation needs.

It is a free desktop tool that joins Talend's wide range of Open Source tools (Integration, Data Quality, MDM, ESB...). Together, these cover everything needed for a production environment and are already used by large corporations around the world. If you want training on the whole Talend suite, our colleagues at Stratebi provide it.

You can use either Talend or Kettle (Pentaho Data Integration) to prepare your data, then exploit it visually through reports, cubes and dashboards with Pentaho, CTools, Saiku, STtools, etc.

Download it for free and start working

Features

- Single user, desktop-based application

- Fully functional data preparation capabilities

- Import, export, and merge Excel and CSV files

- Auto-discovery, smart suggestions, and data visualization

- Cleansing and enrichment functions

- Completely free


New version, and how to migrate from WAQR to the new Ad hoc Reporting in Pentaho

The ability to create ad hoc reports is one of the features most demanded by Business Intelligence users, particularly those using Open Source solutions such as Pentaho.

This feature has received a new boost with the new version of Saiku Reporting. The new version is compatible with Pentaho 5 and 6 and includes new features, bug fixes, etc.

This whitepaper of about 40 pages describes these changes in detail and includes scripts and procedures to automate the migration. The document itself tells you where to get the new Ad hoc Reporting tool and the migration scripts for free. We look forward to your suggestions, improvements, ideas, etc.

These are the contents of the whitepaper:

1. INTRODUCTION

Web Adhoc Query and Reporting

Saiku Reporting

Pentaho Metadata Editor

Steel Wheels model

2. SAIKU REPORTING

Comparison of WAQR / Saiku Reporting / Stratebi's Saiku Reporting

Stratebi's bug fixes and improvements to Saiku Reporting

3. STORAGE STRUCTURES

Structure of a WAQR file

Structure of a Saiku Reporting file

4. AUTOMATING THE MIGRATION PROCESS

Implemented script and usage

Issues encountered

5. CONTACT

In the following video you can see the Ad hoc Reporting tool working with Pentaho:


Why ETL is crucial

Please read this article. It is a gem for everyone working in Data Warehousing, Business Intelligence and Big Data.

At TodoBI we like to say that BI and DW projects are like an iceberg. The hidden part, which is the biggest and most important, is the ETL.

An excerpt from the article:

ETL was born when numerous applications started to be used in the enterprise, roughly at the same time that ERP started being adopted at scale in the late 1980s and early 1990s.

Companies needed to combine the data from all of these applications into one repository (the data warehouse) through a process of Extraction, Transformation, and Loading. That’s the origin of ETL.

So, since these early days, ETL has essentially gotten out of control. It is not uncommon for a modest sized business to have a million lines of ETL code.

ETL jobs can be written in a programming language like Java, in Oracle’s PL/SQL or Teradata’s SQL, using platforms like Informatica, Talend, Pentaho, RedPoint, Ab Initio or dozens of others.

With respect to mastery of ETL, there are two kinds of companies:

- The ETL Masters, who have a well developed, documented, coherent approach to the ETL jobs they have

- The ETL Prisoners who are scared of the huge piles of ETL code that is crucial to running the business but which everyone is terrified to change.
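To make the Extraction, Transformation and Loading steps from the excerpt concrete, here is a deliberately tiny sketch in plain Python. It does not reflect how Talend, Pentaho/Kettle or the other platforms mentioned work internally. The two CSV "source systems", the `fact_sales` table and all function names are hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical extracts from two source applications (stand-ins for ERP/CRM exports).
ERP_ORDERS = "order_id,customer_id,amount\n1,C1,100.50\n2,C2,75.00\n3,C1,20.00\n"
CRM_CUSTOMERS = "customer_id,name,country\nC1, Acme ,es\nC2,Globex,US\n"


def extract(text):
    """Extract: read CSV text into a list of dicts, one per row."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(orders, customers):
    """Transform: clean customer attributes and join each order to its customer."""
    by_id = {
        c["customer_id"]: (c["name"].strip(), c["country"].strip().upper())
        for c in customers
    }
    rows = []
    for o in orders:
        name, country = by_id[o["customer_id"]]
        rows.append((int(o["order_id"]), name, country, float(o["amount"])))
    return rows


def load(conn, rows):
    """Load: write the transformed rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS fact_sales "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, country TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(ERP_ORDERS), extract(CRM_CUSTOMERS)))
totals = conn.execute(
    "SELECT customer, SUM(amount) FROM fact_sales GROUP BY customer ORDER BY customer"
).fetchall()
print(totals)
```

Even at this scale, the transform step already encodes business rules: trimming names, normalising country codes, and joining orders to customers. Multiplied across hundreds of jobs, that is the kind of logic that has to be documented and kept coherent for a company to count among the "ETL Masters".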


53 keys to understanding Machine Learning

A few days ago we presented the 69 keys to understanding Big Data, which already has more than 2,500 views. Today we bring you the 53 keys to understanding Machine Learning.

Enjoy! If you want to learn more or get some hands-on practice, we offer courses.

We also recommend: A brief history of Machine Learning
