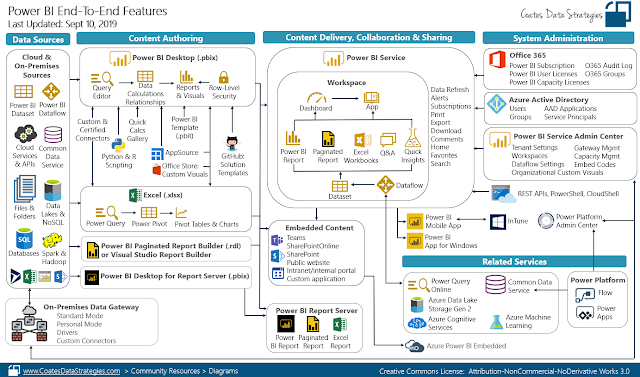

Architecture

The architecture shown here uses the following Azure services. Your own bot might not use all of these services, or it might incorporate additional ones.

Bot logic and user experience

- Bot Framework Service (BFS). This service connects your bot to a communication app such as Cortana, Facebook Messenger, or Slack. It facilitates communication between your bot and the user.

- Azure App Service. The bot application logic is hosted in Azure App Service.
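The Bot Framework Service exchanges messages with the bot as activities, which are JSON objects that carry the message text and addressing information. The minimal sketch below handles one incoming message in the bot logic hosted in App Service. It uses plain dictionaries containing a simplified subset of Activity schema fields (`type`, `text`, `from`, `recipient`, `conversation`, `channelId`, `serviceUrl`, `replyToId`) instead of the Bot Framework SDK. The `on_turn` and `build_reply` names and the echo behavior are hypothetical placeholders.

```python
from typing import Any, Dict, Optional

Activity = Dict[str, Any]


def build_reply(activity: Activity, text: str) -> Activity:
    """Build a reply activity that mirrors the addressing of an incoming one."""
    return {
        "type": "message",
        "text": text,
        # Swap sender and recipient so the reply is addressed back to the user.
        "from": activity["recipient"],
        "recipient": activity["from"],
        "conversation": activity["conversation"],
        "channelId": activity["channelId"],
        "serviceUrl": activity["serviceUrl"],
        "replyToId": activity["id"],
    }


def on_turn(activity: Activity) -> Optional[Activity]:
    """Hypothetical turn handler: answer message activities and ignore the rest."""
    if activity.get("type") != "message":
        return None  # e.g. conversationUpdate or typing activities
    user_text = (activity.get("text") or "").strip()
    return build_reply(activity, f"You said: {user_text}")
```

In a real bot, the reply is sent back through the Bot Framework Service, which delivers it to the user's channel (Slack, Facebook Messenger, and so on). The bot logic itself does not need to know which channel the user is on.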

Bot cognition and intelligence

- Language Understanding (LUIS). Part of Azure Cognitive Services, LUIS enables your bot to understand natural language by identifying user intents and entities.

- Azure Search. Azure Search is a managed search service that provides a fast, searchable index over your documents.

- QnA Maker. QnA Maker is a cloud-based API service that creates a conversational, question-and-answer layer over your data. Typically, it's loaded with semi-structured content such as FAQs. Use it to create a knowledge base for answering natural-language questions.

- Web app. If your bot needs AI solutions not provided by an existing service, you can implement your own custom AI and host it as a web app. This provides a web endpoint for your bot to call.
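A common way to combine these services is to try intent recognition first and fall back to the question-and-answer knowledge base when no intent matches confidently. The sketch below illustrates that routing under stated assumptions:

- Intent scores arrive as a simplified `{intent_name: score}` dictionary, not the actual LUIS response format.
- `INTENT_THRESHOLD` and the handler names are hypothetical.
- The knowledge base is a plain dictionary lookup. QnA Maker's real answer ranking is not reproduced here.

```python
from typing import Callable, Dict, Optional, Tuple

INTENT_THRESHOLD = 0.6  # hypothetical minimum confidence for acting on an intent

# Hypothetical stand-in for a QnA Maker knowledge base (exact-match lookup only).
FAQ: Dict[str, str] = {
    "how do i reset my password": "Use the self-service reset page.",
}


def normalize(question: str) -> str:
    """Lowercase, trim trailing punctuation, and collapse whitespace."""
    return " ".join(question.lower().strip(" ?!.").split())


def qna_lookup(question: str) -> Optional[str]:
    return FAQ.get(normalize(question))


def top_intent(scores: Dict[str, float]) -> Tuple[str, float]:
    name = max(scores, key=lambda k: scores[k])
    return name, scores[name]


def route(
    text: str,
    intent_scores: Dict[str, float],
    handlers: Dict[str, Callable[[str], str]],
) -> str:
    """Dispatch to an intent handler, then the knowledge base, then a fallback."""
    name, score = top_intent(intent_scores) if intent_scores else ("None", 0.0)
    if score >= INTENT_THRESHOLD and name in handlers:
        return handlers[name](text)
    answer = qna_lookup(text)
    if answer is not None:
        return answer
    # A call to a custom AI web app could be slotted in here before giving up.
    return "Sorry, I didn't understand that."
```

For example, with `handlers = {"BookFlight": ...}`, a high `BookFlight` score goes to that handler. A question such as "How do I reset my password?" whose top intent has no handler is answered from the knowledge base.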

Data ingestion

The bot relies on raw data that must be ingested and prepared. Consider any of the following options to orchestrate this process:

- Azure Data Factory. Data Factory orchestrates and automates data movement and data transformation.

- Logic Apps. Logic Apps is a serverless platform for building workflows that integrate applications, data, and services. Logic Apps provides data connectors for many applications, including Office 365.

- Azure Functions. You can use Azure Functions to write custom serverless code that is invoked by a trigger, for example whenever a document is added to Blob Storage or Cosmos DB.
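Whichever orchestrator you choose, the preparation step often turns raw documents into the semi-structured question-and-answer pairs that a knowledge base expects. The sketch below assumes a hypothetical plain-text FAQ format in which questions start with `Q:` and answers with `A:`. In an Azure Functions deployment, logic like `parse_faq` could run inside a blob-triggered function, but the trigger binding is omitted here. Only the parsing step is shown.

```python
from typing import Dict, List, Optional


def parse_faq(text: str) -> List[Dict[str, str]]:
    """Parse a hypothetical 'Q:/A:' FAQ document into question-answer records."""
    pairs: List[Dict[str, str]] = []
    question: Optional[str] = None
    answer_lines: List[str] = []

    def emit() -> None:
        # Keep only complete pairs: a question with at least one answer line.
        if question and answer_lines:
            pairs.append({"question": question, "answer": " ".join(answer_lines)})

    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("Q:"):
            emit()  # close out the previous pair before starting a new one
            question = line[2:].strip()
            answer_lines = []
        elif line.startswith("A:") and question:
            answer_lines.append(line[2:].strip())
        elif line and answer_lines:
            answer_lines.append(line)  # continuation of a multi-line answer
    emit()
    return pairs
```

Questions without an answer are dropped rather than loaded with empty answers. Whether to skip them or flag them for review is a design choice for your pipeline.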

Logging and monitoring

- Application Insights. Use Application Insights to log the bot's application metrics for monitoring, diagnostic, and analytical purposes.

- Azure Blob Storage. Blob storage is optimized for storing massive amounts of unstructured data.

- Cosmos DB. Cosmos DB is well-suited for storing semi-structured log data such as conversations.

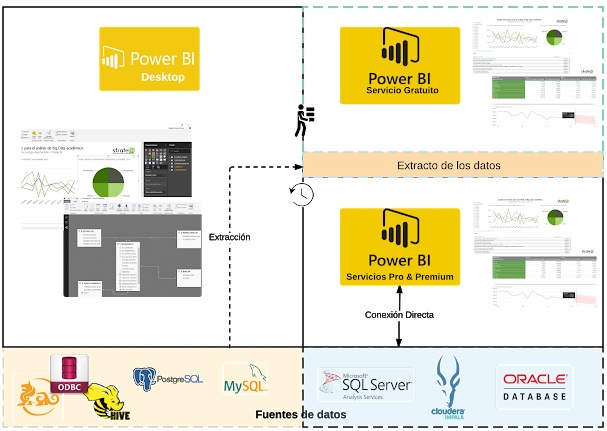

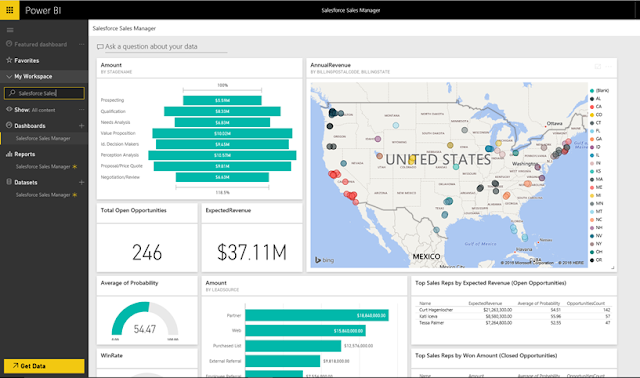

- Power BI. Use Power BI to create monitoring dashboards for your bot.
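To make conversation logs useful for dashboards, each turn can be stored as a small JSON document. The sketch below assumes the following:

- Records are written to Cosmos DB. Cosmos DB requires every item to have a string `id`.
- The container is partitioned on `conversationId`. This is a design assumption, not a requirement.
- The other field names are hypothetical.

The two aggregation functions illustrate the kind of metrics a Power BI dashboard might chart.

```python
import uuid
from collections import Counter
from datetime import datetime, timezone
from typing import Any, Dict, Iterable, List, Optional

LogRecord = Dict[str, Any]


def make_log_record(
    conversation_id: str,
    role: str,
    text: str,
    intent: Optional[str] = None,
    ts: Optional[datetime] = None,
) -> LogRecord:
    """Build one semi-structured log document for a single conversation turn."""
    ts = ts or datetime.now(timezone.utc)
    return {
        "id": str(uuid.uuid4()),           # unique item id
        "conversationId": conversation_id,  # assumed partition key
        "timestamp": ts.isoformat(),
        "role": role,                       # "user" or "bot"
        "text": text,
        "intent": intent,
    }


def intent_counts(records: Iterable[LogRecord]) -> Counter:
    """Count recognized intents on user turns."""
    return Counter(r["intent"] for r in records if r["role"] == "user" and r.get("intent"))


def unanswered_rate(records: Iterable[LogRecord], fallback_intent: str = "None") -> float:
    """Fraction of user turns with no intent or only the fallback intent."""
    user_turns: List[LogRecord] = [r for r in records if r["role"] == "user"]
    if not user_turns:
        return 0.0
    missed = sum(1 for r in user_turns if r.get("intent") in (None, fallback_intent))
    return missed / len(user_turns)
```

A rising unanswered rate is a signal to add intents or knowledge-base entries.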

Security and governance

- Azure Active Directory (Azure AD). Users authenticate through an identity provider such as Azure AD. Azure Bot Service handles the authentication flow and OAuth token management. For details, see "Add authentication to your bot via Azure Bot Service."

- Azure Key Vault. Store credentials and other secrets using Key Vault.
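Keeping secrets in Key Vault means the bot reads them at run time instead of storing them in code or configuration files. The sketch below uses the real `SecretClient` from the `azure-keyvault-secrets` package, with `DefaultAzureCredential` from `azure-identity`. The `KEY_VAULT_URL` setting and the local environment-variable fallback are hypothetical conventions for local development, not part of the Key Vault API.

```python
import os


def get_secret(name: str) -> str:
    """Return a secret from Key Vault when configured, else from the environment."""
    vault_url = os.environ.get("KEY_VAULT_URL")  # hypothetical setting name
    if vault_url:
        # Imported lazily so local runs do not require the Azure SDK packages.
        from azure.identity import DefaultAzureCredential
        from azure.keyvault.secrets import SecretClient

        client = SecretClient(vault_url=vault_url, credential=DefaultAzureCredential())
        return client.get_secret(name).value
    # Local development fallback: "luis-api-key" is read from LUIS_API_KEY.
    env_name = name.upper().replace("-", "_")
    try:
        return os.environ[env_name]
    except KeyError:
        raise KeyError(f"secret {name!r} not found (looked for {env_name})") from None
```

In production, a managed identity for the App Service can be granted access to the vault, so `DefaultAzureCredential` works without any stored credentials.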

Quality assurance and enhancements

- Azure DevOps. Azure DevOps provides many services for app management, including source control, build, test, deployment, and project tracking.

- Visual Studio Code (VS Code). A lightweight code editor for app development. You can use any other IDE with similar features.
